Sustainable AI and Green Computing: Reducing the Environmental Impact of Large-Scale Models with Energy-Efficient Techniques

DOI: https://doi.org/10.26438/ijsrnsc.v13i3.276

Keywords: green computing, sustainable AI, carbon footprint, energy-efficient algorithms, environmental impact

Abstract

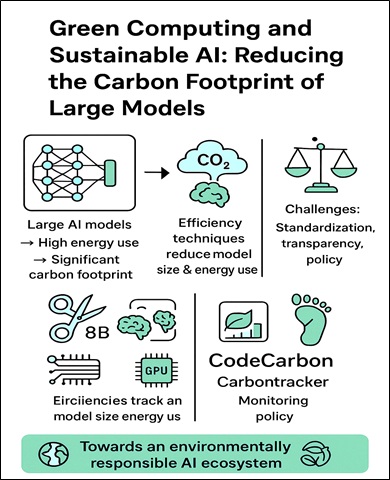

Artificial intelligence (AI) has become an integral part of modern technology, driving advances across numerous sectors, including healthcare, finance, transportation, and entertainment. However, the rapid growth in AI model complexity, particularly the rise of large language models, has raised concerns about their substantial energy consumption and associated carbon emissions. This paper explores the intersection of green computing and sustainable AI, focusing on the carbon footprint of large-scale models, energy-efficient algorithmic solutions, and emerging tools and frameworks designed to measure and mitigate environmental impact. We review current approaches such as model pruning, quantization, knowledge distillation, and efficient hardware, and discuss prominent tools such as CodeCarbon and Carbontracker that enable researchers to track, and thereby reduce, emissions. The paper also highlights ongoing challenges related to standardization, transparency, and policy, and outlines future research directions for creating an environmentally responsible AI ecosystem. By advancing sustainable AI practices, the research community can align innovation with environmental stewardship, ensuring that technological progress supports global climate goals.
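The abstract refers to measuring the carbon footprint of models with tools such as CodeCarbon and Carbontracker. The arithmetic behind such estimates is commonly expressed as energy (average power multiplied by run time, scaled by the data centre's Power Usage Effectiveness, PUE) multiplied by the carbon intensity of the electricity grid. The Python sketch below illustrates that calculation under stated assumptions: the `RunProfile` class, the function names, and every numeric input are hypothetical and are not the API of CodeCarbon, Carbontracker, or any other library. Real tools measure power from the hardware and look up regional grid intensity rather than taking fixed values.

```python
from dataclasses import dataclass


@dataclass
class RunProfile:
    """Hypothetical description of a training or inference run (illustrative only)."""
    avg_power_watts: float             # average draw of the hardware (e.g. GPU + CPU + RAM), in watts
    duration_hours: float              # wall-clock duration of the run, in hours
    carbon_intensity_g_per_kwh: float  # grid carbon intensity, in grams CO2e per kWh (assumed input)
    pue: float = 1.0                   # data-centre Power Usage Effectiveness; 1.0 means no overhead


def energy_kwh(run: RunProfile) -> float:
    """Facility-level energy in kWh: IT energy (W x h / 1000) scaled by PUE."""
    if run.avg_power_watts < 0 or run.duration_hours < 0:
        raise ValueError("power and duration must be non-negative")
    if run.pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return run.avg_power_watts * run.duration_hours / 1000.0 * run.pue


def emissions_kg(run: RunProfile) -> float:
    """Operational emissions in kg CO2e: energy (kWh) x intensity (g/kWh) / 1000."""
    if run.carbon_intensity_g_per_kwh < 0:
        raise ValueError("carbon intensity must be non-negative")
    return energy_kwh(run) * run.carbon_intensity_g_per_kwh / 1000.0


if __name__ == "__main__":
    # Hypothetical inputs chosen only to illustrate the arithmetic, not measured values.
    baseline = RunProfile(avg_power_watts=300.0, duration_hours=10.0,
                          carbon_intensity_g_per_kwh=400.0, pue=1.5)
    # Same hardware and grid, but a compressed model that (hypothetically) finishes in half the time.
    compressed = RunProfile(avg_power_watts=300.0, duration_hours=5.0,
                            carbon_intensity_g_per_kwh=400.0, pue=1.5)
    for name, run in [("baseline", baseline), ("compressed", compressed)]:
        print(f"{name}: {energy_kwh(run):.2f} kWh, {emissions_kg(run):.2f} kg CO2e")
    # prints:
    # baseline: 4.50 kWh, 1.80 kg CO2e
    # compressed: 2.25 kWh, 0.90 kg CO2e
```

In this simplified model, halving the run time (for example through a pruned, quantized, or distilled model) halves both energy and emissions, provided average power stays the same. In practice, compressed models can also change the power draw, so measured values from a tracking tool should be preferred over fixed estimates.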



License

Copyright (c) 2025 Olutayo Ojuawo, Jiboku Folahan Joseph

This work is licensed under a Creative Commons Attribution 4.0 International License.

Authors contributing to this journal agree to publish their articles under the Creative Commons Attribution 4.0 International License, allowing third parties to share their work (copy, distribute, transmit) and to adapt it, under the condition that the authors are given credit and that in the event of reuse or distribution, the terms of this license are made clear.